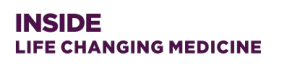

In a paper published in Nature Neuroscience last week, University of Pittsburgh researchers described how reward signals in the brain are modulated by uncertainty. Dopamine signals are intertwined with reward learning; they teach the brain which cues or actions predict the best rewards. New findings from the Stauffer lab at Pitt School of Medicine indicate that dopamine signals also reflect the certainty surrounding reward predictions.

In short, dopamine signals might teach the brain about the likelihood of getting a reward.

The study's authors included three graduate students, Kathryn (Kati) Rothenhoefer, Aydin Alikaya and Tao Hong, as well as Dr. William Stauffer, assistant professor of neurobiology.

Rothenhoefer (KR) and Stauffer (WS) shared their take on what their work reveals about the inner workings of the brain.

Briefly, what is the background for this study?

KR: We were studying ambiguity – a complex environmental factor that makes it hard for humans and animals to know what to predict – and this project was a cool detour that arose organically from our preliminary data. We found something interesting that we were not expecting, and we saw it through to completion.

WS: Dopamine neurons are crucial for reward learning. They are activated by rewards that are better than predicted and suppressed by rewards that are worse than predicted. This pattern of activity is reminiscent of “reward prediction errors,” the differences between received and predicted rewards.

Reward prediction errors are crucial to animal and machine learning. However, in classical animal and machine learning theories, ‘predicted rewards’ are simply the average value of past outcomes. Although these predictions are useful, it would be far more useful to predict not only average values but also more complex statistics that reflect uncertainty. Therefore, we wanted to know whether dopamine teaching signals reflect those more complex statistics, and whether they could be used to teach the brain about real-world incentives.
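To make the classical account concrete, here is a minimal Python sketch of textbook theory. It is an illustration only, not the Stauffer lab's analysis or model. The reward histories and the reward of 9 are hypothetical values in arbitrary units, and the function names are illustrative. The prediction is the running average of past outcomes, built up by nudging it with each prediction error, and the reward prediction error is the received reward minus that prediction.

```python
from statistics import pvariance


def prediction_error(received, predicted):
    """Reward prediction error: received reward minus predicted reward."""
    return received - predicted


def classical_prediction(outcomes):
    """Classical 'predicted reward': the average of past outcomes.

    Computed incrementally: each outcome nudges the prediction by its
    prediction error scaled by 1/n, which reproduces the running mean.
    """
    predicted = 0.0
    for n, reward in enumerate(outcomes, start=1):
        predicted += prediction_error(reward, predicted) / n
    return predicted


# Hypothetical reward histories (arbitrary units): same average, different spread.
histories = {
    "narrow": [4, 5, 5, 6],
    "wide": [1, 2, 8, 9],
}

for name, outcomes in histories.items():
    predicted = classical_prediction(outcomes)
    rpe = prediction_error(9, predicted)  # response to a hypothetical reward of 9
    spread = pvariance(outcomes)          # uncertainty that the mean alone ignores
    print(f"{name}: predicted={predicted:.2f}, RPE={rpe:.2f}, variance={spread:.2f}")
```

Both histories produce the same prediction (5) and the same prediction error for a reward of 9 (4), even though their spreads differ (population variances of 0.5 and 12.5). A mean-only prediction therefore discards exactly the uncertainty information that the researchers asked about.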

What are the main findings of your work?

WS: The main finding is that rare rewards amplify dopamine responses compared to identically sized rewards that are delivered with greater frequency. This implies that predictive neuronal signals reflect uncertainty surrounding predictions and not just the predicted values. It also means that one of the main reward learning systems in the brain can estimate uncertainty and potentially teach downstream brain structures about that uncertainty.

Which findings made you personally excited?

KR: This was the first neural data set I have ever collected, so having such clear results showing that dopamine neurons are more complex than we had thought was really awesome. It’s not every day that one is able to add novel information to a long-accepted dogma in the literature.

WS: Computers are used as an analogy for understanding the brain and its functions. However, many neuroscientists believe that computation is more than an analogy. At the most basic level, the brain is an information processing system. I study dopamine neurons because we can ‘see’ the brain performing mathematical computations. There are few other neural systems where we have such direct evidence of the algorithmic nature of neuronal responses. It is simply fascinating, and these results indicate a new aspect of that algorithm, namely that reward learning systems adapt to uncertainty.

What recommendations do you have for future research as a result of this study?

KR: I’m excited to get back to our originally intended project, which is how the brain deals with ambiguous choices. This will integrate what we now know about how dopamine neurons code information about complex reward environments with what decision-makers believe about ambiguous choices, and how they choose to make decisions in these contexts.

WS: We did the study because I am interested in understanding how beliefs about probability are applied to choices under ambiguity. Under ambiguity, economic decisions are made without knowing the outcome probabilities. Therefore, decision-makers are forced to apply their beliefs about probabilities to make choices. We did this study as a first step to understanding how value and reward probability distributions are coded in the brain, and what form these beliefs can take. With these results in hand, we will now get back to studying choices! Nevertheless, I bet these results have far-reaching implications for biological and artificial intelligence-based learning systems.